Three Types of People That Hate GPT-5

The launch of GPT-5 has been a beautiful chaos.

While some people reported incredible improvements, others judged it to be completely unusable within the first days of the launch. On Reddit, a thread titled "GPT-5 is horrible" gathered nearly 3,000 upvotes and over 1,200 comments filled with dissatisfied users, while others with early access praised the model as “impressive” and “the best yet”.

It feels impossible that people get such different experiences using the same tool. Some users expressed frustration that GPT-5 continued to make up information and trip over simple tasks, while others on social media platforms celebrated major breakthroughs. So where is this divide coming from? Why do some people love GPT-5, while others absolutely hate it?

I did a bit of digging around this because GPT-5 has been mostly exceptional for me. So who are these people that dare to disagree with my views?

1. The one that’s routed the wrong way

GPT-5 features a new model router that redirects user questions to one of five different models underneath, including specialized variants like gpt-5-main-mini and gpt-5-thinking. The router passes simpler questions to smaller models that respond almost instantly, and sends questions that benefit from more consideration to a model that pauses to "think" first.

Just like a first-level support agent shields "more expensive" colleagues from simpler questions, this model router is one of OpenAI's main pillars for dealing with increasing demand. Right after launch in particular, it didn't work very well. According to Sam Altman himself, "The autoswitcher broke and was out of commission for a chunk of the day, and the result was GPT-5 seemed way dumber."

Even after those initial hiccups were fixed, the routing system remains unpredictable. How you phrase your question can still send you to the wrong model, leaving you with a sub-par experience that feels worse than GPT-4o. This is especially frustrating for free users, who can't manually select which variant they want. There is a workaround: explicitly telling the model to "think hard" will usually trigger the thinking mode regardless of your actual question. But needing to game the system for consistent quality feels like a step backward.
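To make the routing idea concrete, here is a toy sketch of how such a router could behave. It is purely illustrative: OpenAI has not published the actual routing logic, so the `route` function, the word-count threshold, and the way the "think hard" hint is detected are all my own assumptions. Only the two model names come from the variants mentioned above.

```python
from dataclasses import dataclass

# Assumption: a phrase that forces the reasoning model. The real trigger
# logic is not public; "think hard" is simply the hint mentioned in the text.
THINK_HINTS = ("think hard",)


@dataclass
class Route:
    model: str   # which underlying model would answer
    reason: str  # why this toy router picked it


def route(prompt: str, word_threshold: int = 40) -> Route:
    """Toy router: pick a model from surface features of the prompt.

    The word-count heuristic is a stand-in for whatever signals the real
    router uses; it is hypothetical and deliberately crude.
    """
    text = prompt.lower()
    # An explicit hint wins regardless of what the question actually is.
    if any(hint in text for hint in THINK_HINTS):
        return Route("gpt-5-thinking", "explicit hint")
    # Long prompts are treated as "hard" in this sketch.
    if len(text.split()) > word_threshold:
        return Route("gpt-5-thinking", "long prompt")
    # Everything else goes to the small, fast model.
    return Route("gpt-5-main-mini", "short prompt")


if __name__ == "__main__":
    print(route("What is the capital of France?"))
    print(route("What is the capital of France? Think hard."))
```

Even in this crude version you can see the problem: the same question lands on a different model depending on nothing but its phrasing.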

2. The one that expected another revolution

This group might have been the most disappointed, not because of technical failures, but because of inflated expectations built up over months of anticipation. Sam Altman did the opposite of grounding expectations by posting images of the Death Star and promising PhD-level intelligence.

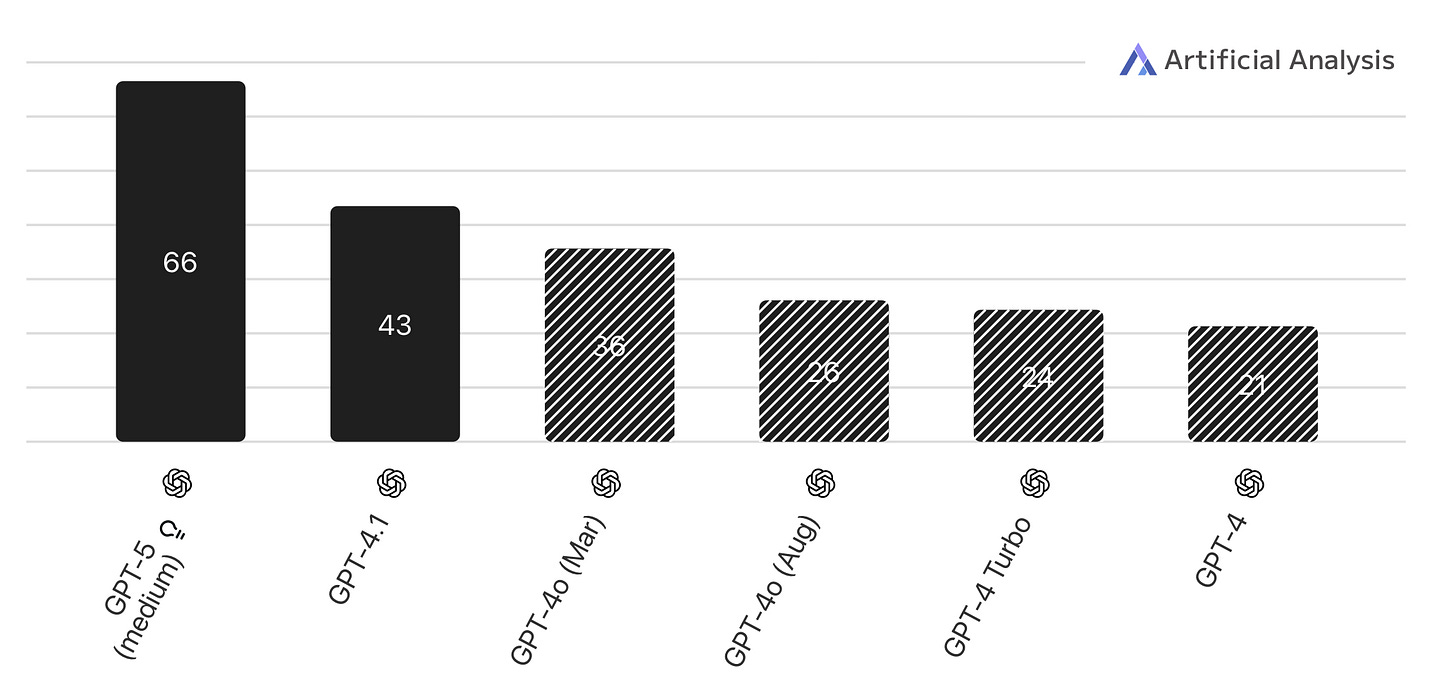

The expectation problem runs deeper than just wanting better performance. Many users were hoping for another "ChatGPT moment" - that feeling of witnessing a fundamental leap in AI capability that we experienced with GPT-4. But OpenAI had been making incremental releases with models like GPT-4 Turbo, GPT-4o, and various versions in between. Each small step up made it harder for any single release to feel revolutionary again.

The steady stream of GPT-4 updates may have accidentally made GPT-5 feel less impressive than it actually is. Each time OpenAI released a slightly better version, our expectations crept higher, so the final jump to GPT-5 didn’t feel as dramatic. But looking at the actual numbers, GPT-5 really is a significant leap forward - it’s just that when you improve things bit by bit, people don’t notice the big moments as much.

3. The one that's emotionally connected

I actively disliked GPT-4o throughout the past 5 months. I thought it was not even close to what some of the competition was offering as their mid-level model and it was absent from most benchmark leaderboards. It felt like OpenAI clearly focused on their o-series of reasoning models like o3, with 4o not getting a lot of attention.

Yet, it was probably the most widely used LLM on earth.

When people started having literal breakdowns and posting petitions to get 4o back after the GPT-5 launch, I was really surprised. It turns out 4o had a very warm, enthusiastic and fun style in its responses. It performed quite well on LMArena, where rankings come from users voting for the answers they prefer rather than from scoring answers against a fixed set of correct solutions, as most other benchmarks do.

The emotional disconnect became even more apparent when users complained about GPT-5's responses. They cited "short replies that are insufficient, more obnoxious AI-stylized talking, and less 'personality'." For these users, the technical improvements didn't matter if the model felt cold and impersonal. They had formed an attachment to 4o’s conversational style that went beyond mere functionality. They literally lost a friend.

Good news, then, that OpenAI brought GPT-4o back from retirement. I'm eager to see how they address these challenges with their next line of model updates. Doing well on benchmarks isn't everything.

The truth is, GPT-5's reception says as much about us as users as it does about the model itself. Our relationship with AI is evolving from pure amazement to more nuanced expectations about reliability, personality, and consistent performance. The polarized reactions might actually be a sign that we're entering a more mature phase of AI adoption - one where the technology is good enough that our complaints shift from "does it work?" to "does it work the way I want it to?"